Uncertainty and Monte Carlo

Lecture 08

September 24, 2025

Review of Last Classes

Simulation Workflow

Simulation

- “Mimic” data generation given a certain model for the relevant processes;

- Often requires some type of discretization (not always!)

- More complex approaches (e.g. discrete-event simulation) for specific use cases.

Calibration and Validation

- Calibration: How do we select parameter values?

- Validation: Does the model adequately reproduce the system dynamics?

Questions?

Text: VSRIKRISH to 22333

Uncertainty and Systems Analysis

Systems and Uncertainty

- Deterministic models: uncertainty due to the separation between the “internals” of the system and the “external” environment.

- Stochastic models: additional uncertainty from internal stochasticity

Uncertainty and Models

Uncertainty can come from:

- Stochastic models;

- Uncertain external conditions (forcings);

- Uncertain parameters;

- Uncertain model dynamics.

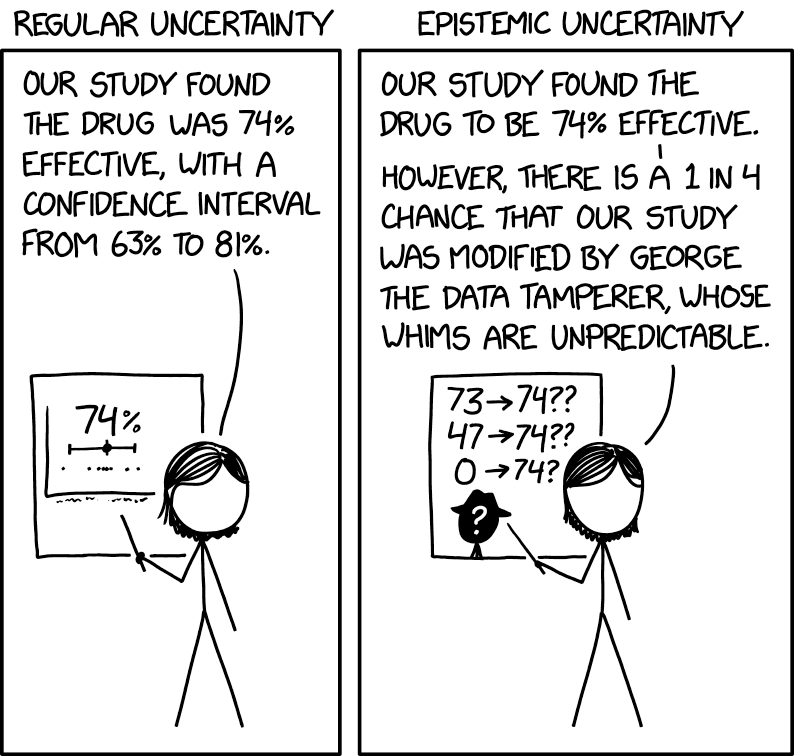

Types of Uncertainty

Two (broad) types of uncertainties:

- Aleatory uncertainty, or uncertainties resulting from randomness;

- Epistemic uncertainty, or uncertainties resulting from lack of knowledge.

Source: XKCD 2440

Probability

Axioms of Probability

Probability is a language for expressing uncertainty.

The axioms of probability are straightforward:

- \(E\) is some “event”: \(\mathcal{P}(E) \geq 0\);

- \(\Omega\) is the total event space: \(\mathcal{P}(\Omega) = 1\);

- \(\mathcal{P}(\cup_{i=1}^\infty E_i) = \sum_{i=1}^\infty \mathcal{P}(E_i)\) for pairwise disjoint (“mutually exclusive”) \(E_i\)

Probability Distributions

Probability distributions associate a probability to every event under consideration (the event space) and have to follow these axioms.

Key Features of Probability Distributions

- Mean/Mode (what events are “typical”)

- Skew (are larger or smaller events more or equally probable)

- Variance (how spread out is the distribution around the mean)

- Tail Probabilities (how probable are extreme events)

Common Distributions

- Gaussian / Normal

- Lognormal

- Binomial

- Uniform / Discrete Uniform

Probability Density Function

A continuous distribution \(\mathcal{D}\) has a probability density function (PDF) \(f_\mathcal{D}(x) = p(x | \theta)\), where \(\theta\) denotes the distribution's parameters.

The probability of \(x\) occurring in an interval \((a, b)\) is \[\mathbb{P}[a \leq x \leq b] = \int_a^b f_\mathcal{D}(x)dx.\]

Important: for a continuous distribution, \(\mathbb{P}(x = x^*)\) is zero for any single point \(x^*\)!
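As a rough numerical illustration of the interval formula, the sketch below integrates a PDF with a midpoint rule. The standard normal distribution, the helper name `interval_probability`, and the grid size are assumptions chosen for the example, not part of the lecture.

```python
from statistics import NormalDist


def interval_probability(dist, a, b, n=10_000):
    """Approximate P[a <= x <= b] by midpoint-rule integration of the PDF."""
    width = (b - a) / n
    return sum(dist.pdf(a + (i + 0.5) * width) for i in range(n)) * width


# Assumed example distribution: standard normal.
dist = NormalDist(mu=0.0, sigma=1.0)

# Probability of falling within one standard deviation of the mean.
p_one_sigma = interval_probability(dist, -1.0, 1.0)
print(f"P[-1 <= x <= 1] ~ {p_one_sigma:.4f}")

# Shrinking the interval around a single point drives the probability to zero.
shrinking = [interval_probability(dist, -eps, eps) for eps in (1e-1, 1e-3, 1e-6)]
print(shrinking)
```

The shrinking intervals show why a single point carries zero probability under a continuous distribution: the integral's width goes to zero while the density stays finite.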

Probability Mass Functions

Discrete distributions have probability mass functions (PMFs), which are defined at point values, so \(p(x = x^*)\) can be nonzero.

Cumulative Distribution Functions

If \(\mathcal{D}\) is a distribution with PDF \(f_\mathcal{D}(x)\), the cumulative distribution function (CDF) of \(\mathcal{D}\) is \(F_\mathcal{D}(x)\):

\[F_\mathcal{D}(x) = \int_{-\infty}^x f_\mathcal{D}(u)du.\]

Relationship Between PDFs and CDFs

Since \[F_\mathcal{D}(x) = \int_{-\infty}^x f_\mathcal{D}(u)du,\]

if \(f_\mathcal{D}\) is continuous at \(x\), the Fundamental Theorem of Calculus gives: \[f_\mathcal{D}(x) = \frac{d}{dx}F_\mathcal{D}(x).\]
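The PDF/CDF relationship can be checked numerically. This is a minimal sketch that compares a central finite difference of the CDF with the PDF; the normal distribution parameters, the step size `h`, and the helper name `cdf_derivative` are illustrative assumptions.

```python
from statistics import NormalDist


def cdf_derivative(dist, x, h=1e-5):
    """Central finite-difference approximation of dF/dx at x."""
    return (dist.cdf(x + h) - dist.cdf(x - h)) / (2 * h)


# Assumed example distribution: normal with mean 2 and standard deviation 1.5.
dist = NormalDist(mu=2.0, sigma=1.5)

for x in (0.0, 2.0, 4.5):
    # The two columns should agree up to finite-difference error.
    print(x, cdf_derivative(dist, x), dist.pdf(x))
```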

Quantiles

The quantile function is the inverse of the CDF:

\[q(\alpha) = F^{-1}_\mathcal{D}(\alpha)\]

So \[x_0 = q(\alpha) \iff \mathbb{P}_\mathcal{D}(X \leq x_0) = \alpha.\]
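A short sketch of the quantile/CDF round trip, assuming a standard normal distribution and an arbitrary level \(\alpha = 0.975\) (both are choices made for this example). Python's `statistics.NormalDist` exposes the quantile function as `inv_cdf`.

```python
from statistics import NormalDist

# Assumed example: standard normal distribution and alpha = 0.975.
dist = NormalDist(mu=0.0, sigma=1.0)
alpha = 0.975

# Quantile function: the value x0 with CDF(x0) = alpha.
x0 = dist.inv_cdf(alpha)

# Applying the CDF to the quantile should recover alpha.
alpha_recovered = dist.cdf(x0)
print(x0, alpha_recovered)
```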

Distributions Are Assumptions

Specifying a distribution is making an assumption about observations and any applicable constraints.

Examples: If your observations are…

- Continuous and fat-tailed? Cauchy distribution

- Continuous and bounded? Beta distribution

- Sums of positive random variables? Gamma or Normal distribution.

Selecting a Distribution

A distribution implicitly answers questions like:

- What is the most probable event? How much more likely is it than the others?

- Are larger or smaller events more, less, or equally probable?

- How probable are extreme events?

- Are different events correlated, or are they independent?

Probability Distribution Tails

The tails of distributions represent the probability of high-impact outcomes.

Key consideration: Small changes to these (low) probabilities can greatly influence risk.
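To make the point about tails concrete, the sketch below compares the probability of exceeding a threshold under a standard normal and a standard Cauchy distribution. The threshold values and the two helper names are assumptions for illustration; the Cauchy tail uses the closed-form CDF \(F(x) = \tfrac{1}{2} + \arctan(x)/\pi\).

```python
import math
from statistics import NormalDist


def normal_tail(x):
    """P(X > x) for a standard normal distribution."""
    return 1.0 - NormalDist().cdf(x)


def cauchy_tail(x):
    """P(X > x) for a standard Cauchy distribution."""
    return 0.5 - math.atan(x) / math.pi


# Illustrative thresholds for an "extreme" outcome.
for threshold in (2.0, 3.0, 5.0):
    print(threshold, normal_tail(threshold), cauchy_tail(threshold))
```

Both distributions are symmetric and centered at zero, yet the exceedance probabilities differ by orders of magnitude at large thresholds, which is where risk estimates are most sensitive.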

“What Distribution Should I Use?”

There is no right answer to this, no matter what a statistical test tells you.

- What assumptions are justifiable?

- Particularly: think about tails, symmetry/skew

- What information do you have?

“What Distribution Should I Use?”

For example, suppose our data are counts of events:

- If you know something about rates, you can use a Poisson distribution

- If you know something about probabilities, you can use a Binomial distribution.
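As a hedged illustration of the two count models, the sketch below evaluates both PMFs directly from their formulas. The numbers (100 trials, success probability 0.03, and the matching rate of 3 events) are made up for the example.

```python
import math


def binomial_pmf(k, n, p):
    """P(K = k) for n independent trials with success probability p."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)


def poisson_pmf(k, rate):
    """P(K = k) for events occurring at a given average rate."""
    return math.exp(-rate) * rate**k / math.factorial(k)


# Hypothetical inputs: 100 trials at p = 0.03, or equivalently a rate of 3.
n_trials, p_success = 100, 0.03
rate = n_trials * p_success

for k in range(7):
    print(k, binomial_pmf(k, n_trials, p_success), poisson_pmf(k, rate))
```

With many trials and a small success probability the two PMFs are close, so the choice mostly reflects which quantity (a rate or a per-trial probability) you can justify.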

Conditional Probabilities

We often don’t want to just know if a particular event \(A\) has a certain probability, but also how other events (call them \(B\)) might depend on that outcome.

In other words:

We want the conditional probability of \(B\) given \(A\), denoted \(\mathbb{P}(B|A)\).

Conditional Probabilities

We can write conditional probabilities in terms of unconditional probabilities:

\[\mathbb{P}(B|A) = \frac{\mathbb{P}(A \cap B)}{\mathbb{P}(A)}, \quad \mathbb{P}(A) > 0.\]
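A small worked example of this formula, using exact enumeration of two fair dice. The events are hypothetical choices for illustration: \(A\) is "the first die shows 6" and \(B\) is "the total is at least 10".

```python
from fractions import Fraction
from itertools import product

# All 36 equally likely outcomes of rolling two fair dice.
outcomes = list(product(range(1, 7), repeat=2))


def prob(event):
    """Exact probability of an event (a predicate on outcomes)."""
    return Fraction(sum(1 for o in outcomes if event(o)), len(outcomes))


def event_a(o):
    return o[0] == 6  # first die shows 6


def event_b(o):
    return sum(o) >= 10  # total is at least 10


p_a = prob(event_a)
p_a_and_b = prob(lambda o: event_a(o) and event_b(o))
p_b = prob(event_b)

# Conditional probability from the two unconditional probabilities.
p_b_given_a = p_a_and_b / p_a
print(p_b, p_b_given_a)
```

Here conditioning on \(A\) changes the probability of \(B\), so the two events are not independent.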

Monte Carlo

Stochastic Simulation

Monte Carlo simulation: Propagating random samples through a model to estimate a value (usually an expectation or a quantile).

Goals of Monte Carlo

Monte Carlo is a broad method, which can be used to:

- Obtain probability distributions of outputs;

- Estimate deterministic quantities.

Monte Carlo Simulation

Goal: Estimate \(\mathbb{E}_f\left[h(x)\right]\), \(x \sim f(x)\)

Monte Carlo principle:

- Sample \(x^1, x^2, \ldots, x^N \sim f(x)\)

- Estimate \[\mathbb{E}_f\left[h(x)\right] = \int_{x \in X} h(x)f(x)dx \approx \frac{1}{N} \sum_{n=1}^N h(x^n)\]
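The two steps above can be sketched in a few lines. As an assumed example, take \(f\) to be a standard normal and \(h(x) = x^2\), so the true expectation is the variance, 1. The helper name `mc_expectation`, the seed, and the sample size are illustrative choices.

```python
import random


def mc_expectation(h, sampler, n):
    """Monte Carlo estimate of E[h(x)]: average h over n draws from sampler."""
    return sum(h(sampler()) for _ in range(n)) / n


# Seeded generator so the sketch is reproducible.
rng = random.Random(1)

# Assumed example: x ~ Normal(0, 1) and h(x) = x^2, whose expectation is 1.
estimate = mc_expectation(lambda x: x**2, lambda: rng.gauss(0.0, 1.0), 100_000)
print(estimate)
```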

MC Example: Finding \(\pi\)

How can we use MC to estimate \(\pi\)?

Hint: Think of \(\pi\) as an expected value…

MC Example: Finding \(\pi\)

Finding \(\pi\) by sampling points uniformly from the square \([-1, 1]^2\) and computing the fraction that fall inside the unit circle. This is an example of Monte Carlo integration.

\[\frac{\text{Area of Circle}}{\text{Area of Square}} = \frac{\pi}{4}\]
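A minimal sketch of this estimate, assuming points are drawn uniformly from the square \([-1, 1]^2\) that encloses the unit circle. The function name `estimate_pi`, the seed, and the sample size are choices made for the example.

```python
import random


def estimate_pi(n, rng):
    """Estimate pi as 4 times the fraction of uniform points inside the unit circle."""
    inside = 0
    for _ in range(n):
        x = rng.uniform(-1.0, 1.0)
        y = rng.uniform(-1.0, 1.0)
        if x * x + y * y <= 1.0:
            inside += 1
    # inside / n approximates (area of circle) / (area of square) = pi / 4.
    return 4.0 * inside / n


rng = random.Random(1)  # seeded for reproducibility
pi_hat = estimate_pi(200_000, rng)
print(pi_hat)
```

In expected-value terms, \(\pi/4\) is the expectation of the indicator that a uniformly sampled point lands inside the circle.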

MC Example: Dice

What is the probability of rolling 4 dice for a total of 19?

Can simulate dice rolls and find the frequency of 19s among the samples.
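One way this simulation might look, with an exact enumeration alongside as a check. The sample size, seed, and function names are assumptions for the sketch; the dice are assumed fair and six-sided.

```python
import random
from itertools import product


def simulate_total_frequency(target, n_dice, n_samples, rng):
    """Fraction of simulated rolls of n_dice fair dice whose total equals target."""
    hits = 0
    for _ in range(n_samples):
        total = sum(rng.randint(1, 6) for _ in range(n_dice))
        if total == target:
            hits += 1
    return hits / n_samples


def exact_total_probability(target, n_dice):
    """Exact probability by enumerating all 6**n_dice equally likely rolls."""
    rolls = list(product(range(1, 7), repeat=n_dice))
    return sum(1 for roll in rolls if sum(roll) == target) / len(rolls)


rng = random.Random(1)  # seeded for reproducibility
p_mc = simulate_total_frequency(19, 4, 100_000, rng)
p_exact = exact_total_probability(19, 4)
print(p_mc, p_exact)
```

Enumeration is feasible here because there are only 1296 outcomes; the Monte Carlo approach is the one that still works when the outcome space is too large to list.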

MC Estimate of Quantiles

Would like to estimate the CDF \(F\) with some approximation \(\hat{F}_n\), then compute \(\hat{z}^\alpha_n = \hat{F}_n^{-1}(\alpha)\) as an estimator of the \(\alpha\)-quantile \(z^\alpha\).

Given samples \(\hat{\mathbf{y}} = y_1, \ldots, y_n \sim F\), define \[\hat{F}_n(y) = \frac{1}{n} \sum_{i=1}^n \mathbb{I}(y_i \leq y)\] and \(\hat{z}^\alpha_n = \hat{F}_n^{-1}(\alpha)\).
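The empirical CDF and quantile estimator can be sketched as follows. The samples are drawn from a standard normal purely as an assumed test case, and the inverse is taken as the smallest sample \(y\) with \(\hat{F}_n(y) \geq \alpha\), which is one common convention rather than the only one.

```python
import math
import random


def empirical_cdf(samples, y):
    """F_n(y): fraction of samples less than or equal to y."""
    return sum(1 for s in samples if s <= y) / len(samples)


def empirical_quantile(samples, alpha):
    """Smallest sample value y with F_n(y) >= alpha (assumes 0 < alpha <= 1)."""
    ordered = sorted(samples)
    index = max(math.ceil(alpha * len(ordered)), 1) - 1
    return ordered[index]


rng = random.Random(1)  # seeded for reproducibility
samples = [rng.gauss(0.0, 1.0) for _ in range(50_000)]

alpha = 0.9
z_hat = empirical_quantile(samples, alpha)
print(z_hat, empirical_cdf(samples, z_hat))
```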

Monte Carlo Estimation

This type of estimation can be repeated with any simulation model that has a stochastic component.

For example, consider our dissolved oxygen model. Suppose that we have a probability distribution for the inflow DO.

How could we compute the probability of DO falling below the regulatory standard somewhere downstream?

Monte Carlo and Uncertainty Propagation

- Draw samples from some distribution;

- Run them through one or more models;

- Compute the (conditional) probability of outcomes of interest (for good or bad).
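The three steps above can be sketched with a deliberately simple stand-in model. Everything here is hypothetical: `toy_min_do` is not the course's dissolved oxygen model, and the inflow distribution, the fixed 2.5 mg/L sag, and the 5.0 mg/L standard are invented numbers used only to show the workflow.

```python
import random


def toy_min_do(inflow_do, sag=2.5):
    """Hypothetical stand-in model: minimum downstream DO (mg/L) for a given inflow DO."""
    return inflow_do - sag


def exceedance_probability(model, sampler, threshold, n):
    """Fraction of sampled inputs whose model output falls below the threshold."""
    below = sum(1 for _ in range(n) if model(sampler()) < threshold)
    return below / n


rng = random.Random(1)  # seeded for reproducibility

# Step 1: sample inflow DO from an assumed Normal(8.0, 0.5) distribution.
# Step 2: run each sample through the (toy) model.
# Step 3: estimate the probability of violating an assumed 5.0 mg/L standard.
p_violation = exceedance_probability(
    toy_min_do, lambda: rng.gauss(8.0, 0.5), threshold=5.0, n=100_000
)
print(p_violation)
```

Because the toy model is linear, the answer could be computed analytically; the same loop applies unchanged when the model is a full simulation with no closed form.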

Key Takeaways

- Systems analysis features many uncertainties, which may be neglected with certain methods/model setups.

- Risk: Combination of hazard, exposure, vulnerability, and response.

- Choice of probability distribution can have large impacts on uncertainty and risk estimates: try not to use distributions just because they’re convenient.

- Monte Carlo: Estimate expected values of outcomes using simulation.

Upcoming Schedule

Next Classes

Monday: Monte Carlo Lab (clone the lab before class, and instantiate the environment too if you can)

Wednesday: Why Does Monte Carlo Work?